Conclusion: Disruptive Changes Created by UALink and OpenAI, and Cisco’s Leap Forward

OpenAI’s expanded strategic investments and the growing market influence of the UALink consortium provide long-term growth momentum for Cisco’s data center business.

- OpenAI’s transition to a ‘Public Benefit Corporation (PBC)’ and the announcement of a large-scale infrastructure investment of approximately $1.4 trillion are leading to an explosive increase in AI workloads.

- As UALink, an 'open connectivity standard,' becomes mainstream in the evolution of AI infrastructure, network infrastructure flexibility and scalability are emerging as top priorities.

- As connectivity, security, and optimized network architecture become indispensable, Cisco is expected to benefit from both competition and partnerships.

Background: OpenAI, UALink, and Changes in the Market Environment

1. OpenAI’s PBC Transition and $1.4 Trillion Infrastructure Investment Plan

In October 2025, OpenAI completed a restructuring in which its for-profit business became a ‘Public Benefit Corporation (PBC)’, a form that combines public benefit with profit, while remaining under its non-profit parent. It is now actively pursuing new investments and data center expansion. CEO Sam Altman unveiled a large-scale infrastructure investment plan of approximately $1.4 trillion, which corresponds to about 30 gigawatts (GW) of data center capacity. At this scale, which the plan equates to the power supplied to about 7.5 million U.S. households, OpenAI is evolving beyond a mere software company into an 'AI infrastructure utility.'

According to OpenAI’s plan, the long-term goal is to establish a technical and financial framework capable of adding 1 gigawatt per week, strengthening strategic partnerships with AMD, Broadcom, NVIDIA, Oracle, and others. This massive infrastructure expansion is rapidly increasing demand for network, switching, routing, and storage equipment across the entire industry value chain.
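The headline figures above can be sanity-checked with simple arithmetic. The following is a minimal sketch using only the approximate numbers quoted in this article; the function names are illustrative and not part of any OpenAI disclosure.

```python
# Figures quoted in the article (all approximate).
TOTAL_INVESTMENT_USD = 1.4e12   # ~$1.4 trillion
CAPACITY_GW = 30.0              # ~30 GW of data center capacity
HOUSEHOLDS = 7.5e6              # ~7.5 million U.S. households
BUILD_RATE_GW_PER_WEEK = 1.0    # stated long-term goal


def cost_per_gw(total_usd: float, capacity_gw: float) -> float:
    """Implied investment per gigawatt of capacity, in USD."""
    return total_usd / capacity_gw


def kw_per_household(capacity_gw: float, households: float) -> float:
    """Implied average power per household, in kW (1 GW = 1e6 kW)."""
    return capacity_gw * 1e6 / households


def weeks_to_build(capacity_gw: float, gw_per_week: float) -> float:
    """Weeks needed to add the full capacity at a steady build rate."""
    return capacity_gw / gw_per_week


if __name__ == "__main__":
    per_gw = cost_per_gw(TOTAL_INVESTMENT_USD, CAPACITY_GW)
    print(f"Implied cost per GW: ${per_gw / 1e9:.1f}B")
    print(f"Implied kW per household: {kw_per_household(CAPACITY_GW, HOUSEHOLDS):.1f}")
    print(f"Weeks at 1 GW/week: {weeks_to_build(CAPACITY_GW, BUILD_RATE_GW_PER_WEEK):.0f}")
```

Under these inputs the plan implies roughly $46.7 billion per gigawatt, about 4 kW per household, and 30 weeks of construction at the 1 GW per week target rate.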

2. UALink Consortium and AI Infrastructure Innovation

In response to NVIDIA’s proprietary NVLink, the UALink consortium (whose members include AMD, Broadcom, Cisco, Google, HPE, Intel, Meta, and Microsoft) has rapidly established an open AI interconnect standard. Officially launched in April 2025, the UALink 1.0 standard offers the following features:

- 200Gbps throughput per lane (800Gbps bidirectional with 4 lanes)

- Round-trip latency less than 1 microsecond (100-150 nanoseconds port-to-port latency)

- Support for connecting up to 1,024 accelerators in a single fabric

- Maximized interoperability based on standard Ethernet SerDes

This allows various hardware and software vendors to freely participate in data center infrastructure and enables nascent AI companies to cost-effectively expand their infrastructure, creating a truly 'open ecosystem.'
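To make the UALink 1.0 figures listed above concrete, here is a small sketch of the bandwidth arithmetic. It treats the quoted 200Gbps per lane as a raw line rate and ignores encoding and protocol overhead; the one-port-per-accelerator default is a hypothetical assumption, not something stated in the specification summary above.

```python
LANE_RATE_GBPS = 200      # per-lane rate quoted for UALink 1.0
LANES_PER_PORT = 4        # x4 port configuration quoted in the article
MAX_ACCELERATORS = 1024   # maximum accelerators in a single fabric


def port_bandwidth_gbps(lanes: int, lane_rate_gbps: float) -> float:
    """Raw bandwidth of one port: lanes multiplied by the per-lane rate."""
    return lanes * lane_rate_gbps


def gbps_to_gigabytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second."""
    return gbps / 8


def fabric_injection_tbps(accelerators: int, port_gbps: float,
                          ports_per_accelerator: int = 1) -> float:
    """Aggregate injection bandwidth in Tbps (ports_per_accelerator is hypothetical)."""
    return accelerators * ports_per_accelerator * port_gbps / 1000


if __name__ == "__main__":
    port = port_bandwidth_gbps(LANES_PER_PORT, LANE_RATE_GBPS)
    print(f"Per-port bandwidth: {port} Gbps ({gbps_to_gigabytes_per_s(port)} GB/s)")
    print(f"Aggregate at 1,024 accelerators: {fabric_injection_tbps(MAX_ACCELERATORS, port)} Tbps")
```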

Cisco’s Strategic Positioning and Growth Points

1. Securing Explosive AI Infrastructure Demand - Performance-Proven Results

Cisco has already demonstrated leadership in the AI infrastructure market with actual revenue performance.

FY 2025 AI Infrastructure Order Status

- Total FY 2025 AI infrastructure orders: $2.0+ billion (more than double the initial target of $1.0 billion)

- Q4 FY 2025 alone: $800M in orders (up from $600M in Q3)

- Order composition: approximately 2/3 systems, 1/3 optics

These orders matter beyond the headline figure: five webscale customers have adopted Cisco's products, and three of the top six webscalers recorded triple-digit growth rates. Compared to NVIDIA’s approximately $5 billion in AI networking revenue in three months (Q4 FY 2025), this scale indicates that Cisco is still in the early stages, with a base for potential 50-100x growth over the next five years.
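The order figures above reduce to a few ratios. The sketch below uses only the numbers quoted in this section; note that it compares Cisco orders with NVIDIA revenue, which is not a like-for-like comparison, so the final ratio is indicative only.

```python
from fractions import Fraction

FY2025_ORDERS_MUSD = 2000        # "$2.0+ billion", taken at its lower bound
Q3_ORDERS_MUSD = 600
Q4_ORDERS_MUSD = 800
NVIDIA_QTR_NETWORKING_MUSD = 5000  # approx. figure quoted in the article


def growth_pct(previous: float, current: float) -> float:
    """Percentage growth from one period to the next."""
    return (current - previous) / previous * 100


def split_orders(total: float, systems_share: Fraction = Fraction(2, 3)):
    """Split total orders into (systems, optics) using the quoted 2/3 vs 1/3 mix."""
    systems = total * systems_share
    return float(systems), float(total - systems)


if __name__ == "__main__":
    print(f"Q3 to Q4 order growth: {growth_pct(Q3_ORDERS_MUSD, Q4_ORDERS_MUSD):.1f}%")
    systems, optics = split_orders(FY2025_ORDERS_MUSD)
    print(f"Systems: ${systems:.0f}M, optics: ${optics:.0f}M")
    ratio = NVIDIA_QTR_NETWORKING_MUSD / Q4_ORDERS_MUSD
    print(f"NVIDIA quarterly networking revenue vs Cisco Q4 orders: {ratio:.2f}x")
```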

2026 Fiscal Year Growth Outlook

- FY 2026 revenue forecast: $59.0-$60.0 billion (+5% year-over-year)

- Increased share of AI infrastructure business: Over $1.0 billion already recognized as revenue

- First quarter (Q1 FY 2026) revenue estimate: $14.65-$14.85 billion

Despite Cisco’s cautious guidance, AI infrastructure orders appear to be constrained by supply rather than by demand. In other words, if Cisco can supply enough Silicon One chips and switching capacity, orders are likely to be much higher.

2. AI Cluster Optimization via RoCEv2 - Key to High-Performance, Low-Latency Communication

In AI data centers, high-speed communication between GPUs is a critical factor determining model training performance. Cisco is addressing this issue through RoCEv2 (RDMA over Converged Ethernet v2) technology.

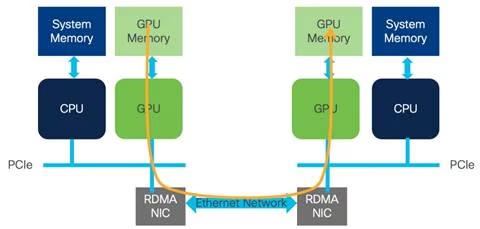

Importance of RDMA Technology

RDMA (Remote Direct Memory Access) is a proven technology that has long been used in high-performance computing (HPC) and storage networking. The key advantages of RDMA are as follows:

- Direct Memory Access: Direct data transfer between GPU memories without CPU intervention

- Ultra-Low Latency: Microsecond-level latency achieved by bypassing the operating system network stack

- High Throughput: Maximum efficiency achieved by offloading transfers to network adapter hardware

- Reduced CPU Overhead: Minimizes CPU utilization, allowing focus on AI model training

- Power Efficiency: Energy savings due to reduced CPU load

Emergence and Standardization of RoCEv2

Early RDMA implementations evolved primarily around InfiniBand (IB). While InfiniBand was ideal for high-performance computing environments, it came at the cost of building a separate network infrastructure in enterprise data centers.

RoCEv2 (RDMA over Converged Ethernet v2) solved this problem:

Evolution of RoCE

- RoCE v1 (2010): Operated only within the same Layer 2 network

- RoCEv2 (2014): Supported IP routing, enabling broader network expansion

RoCEv2 encapsulates the InfiniBand transport protocol with Ethernet, IP, and UDP headers, allowing all the benefits of RDMA to be leveraged over existing Ethernet network infrastructure. This means that high-performance AI infrastructure can be implemented in enterprise data centers without building a separate network.
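The encapsulation described above can be illustrated by packing the headers by hand. This is a simplified sketch, not a working RoCEv2 stack: it builds only the UDP header and the 12-byte InfiniBand Base Transport Header (BTH), omits the Ethernet and IP headers, assumes a payload length that is a multiple of 4 bytes, and uses a zero placeholder where a real adapter would compute the invariant CRC (ICRC). The function names are illustrative.

```python
import struct

ROCEV2_UDP_DST_PORT = 4791   # IANA-assigned UDP destination port for RoCEv2
OPCODE_RC_SEND_ONLY = 0x04   # Reliable Connection "SEND Only" opcode

# Header sizes in bytes for an untagged IPv4 frame.
ETH_HDR, IPV4_HDR, UDP_HDR, BTH_HDR, ICRC, ETH_FCS = 14, 20, 8, 12, 4, 4


def build_bth(opcode: int, dest_qp: int, psn: int, pkey: int = 0xFFFF,
              ack_req: bool = False) -> bytes:
    """Pack the 12-byte InfiniBand Base Transport Header (BTH)."""
    flags = 0                                            # SE, MigReq, PadCnt, TVer left at 0
    qp_word = dest_qp & 0xFFFFFF                         # 8 reserved bits + 24-bit dest QP
    psn_word = (int(ack_req) << 31) | (psn & 0xFFFFFF)   # AckReq bit + 24-bit PSN
    return struct.pack("!BBHII", opcode, flags, pkey, qp_word, psn_word)


def build_rocev2_datagram(src_port: int, dest_qp: int, psn: int,
                          payload: bytes) -> bytes:
    """Return UDP header + BTH + payload + placeholder ICRC."""
    bth = build_bth(OPCODE_RC_SEND_ONLY, dest_qp, psn)
    icrc = b"\x00" * ICRC                                # placeholder, not a real ICRC
    length = UDP_HDR + len(bth) + len(payload) + len(icrc)
    udp = struct.pack("!HHHH", src_port, ROCEV2_UDP_DST_PORT, length, 0)
    return udp + bth + payload + icrc


def wire_efficiency(payload_bytes: int) -> float:
    """Fraction of on-wire bytes that carry payload (preamble and gaps ignored)."""
    overhead = ETH_HDR + IPV4_HDR + UDP_HDR + BTH_HDR + ICRC + ETH_FCS
    return payload_bytes / (payload_bytes + overhead)


if __name__ == "__main__":
    dgram = build_rocev2_datagram(49152, dest_qp=0x12, psn=7, payload=b"\x00" * 64)
    print(len(dgram), "bytes in the UDP datagram")
    print(f"Payload efficiency at 4096 bytes: {wire_efficiency(4096):.3f}")
```

Because the RDMA transport rides inside ordinary UDP/IP, any routed Ethernet fabric can forward it, which is the property that distinguishes RoCEv2 from RoCE v1.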

Technical Requirements for RoCEv2

For RoCEv2 to achieve optimal performance, the following conditions must be met:

Lossless Fabric

- ECN (Explicit Congestion Notification): Detects network congestion in advance and notifies the sender

- PFC (Priority Flow Control): Priority-based congestion control to protect critical traffic
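How ECN and PFC interact on a switch queue can be sketched as two threshold functions. This is a simplified model under stated assumptions: ECN marking follows a WRED-style linear ramp between two queue depths, and PFC uses pause/resume thresholds with hysteresis. All threshold values below are hypothetical and are not Cisco defaults.

```python
# Hypothetical per-queue thresholds in kilobytes (illustrative only).
ECN_KMIN_KB = 150     # below this depth, never mark
ECN_KMAX_KB = 3000    # at or above this depth, always mark
ECN_PMAX = 0.1        # marking probability reached just below ECN_KMAX_KB
PFC_XOFF_KB = 4000    # send a PFC pause when the queue reaches this depth
PFC_XON_KB = 3500     # resume once the queue drains to this depth


def ecn_mark_probability(queue_kb: float, kmin_kb: float = ECN_KMIN_KB,
                         kmax_kb: float = ECN_KMAX_KB,
                         pmax: float = ECN_PMAX) -> float:
    """Probability that a packet is ECN-marked at the given queue depth."""
    if queue_kb <= kmin_kb:
        return 0.0
    if queue_kb >= kmax_kb:
        return 1.0
    return pmax * ((queue_kb - kmin_kb) / (kmax_kb - kmin_kb))


def pfc_should_pause(queue_kb: float, currently_paused: bool,
                     xoff_kb: float = PFC_XOFF_KB,
                     xon_kb: float = PFC_XON_KB) -> bool:
    """Return True if the upstream sender should be paused for this priority."""
    if queue_kb >= xoff_kb:
        return True
    if queue_kb <= xon_kb:
        return False
    return currently_paused   # between thresholds: keep the previous state


if __name__ == "__main__":
    for depth in (100, 1575, 3200, 4100):
        print(depth, ecn_mark_probability(depth), pfc_should_pause(depth, False))
```

Placing the ECN thresholds below the PFC pause threshold lets senders slow down in response to marks before the switch has to pause the link, so PFC acts as a last resort against drops.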

Cisco’s Solution

- PFC and ECN technologies built into Silicon One chips

- Integrated queuing and congestion management in Nexus series switches

- QoS (Quality of Service) profiles optimized for AI workloads

Utilizing GPUDirect RDMA in AI Clusters

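GPUDirect RDMA is an NVIDIA technology that lets an RDMA-capable network adapter read and write GPU memory directly. Combined with RoCEv2, it allows direct memory communication between NVIDIA GPUs on different servers. This enables: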

- Improved Distributed Training Performance: Minimizes gradient synchronization latency between multiple GPUs

- Optimized AllReduce Communication: Eliminates bottlenecks in large-scale model training

- Maximized Scalability: Efficient management of hundreds to thousands of GPU clusters

Indeed, large AI companies like Meta and Google are adopting RoCEv2-based data center networking as a standard, and Cisco is positioned as a key supplier to this segment.
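The AllReduce pattern mentioned above is what makes the network so important for training. The following is a minimal single-process simulation of ring all-reduce, one common algorithm for gradient synchronization; it is a teaching sketch and not how any particular collective library is implemented. Each simulated worker sends about 2(N-1)/N times the gradient size, nearly independent of the number of workers N.

```python
def ring_allreduce(grads):
    """Sum equal-length gradient vectors across n simulated workers over a ring."""
    n = len(grads)
    size = len(grads[0])
    assert size % n == 0, "vector length must be divisible by the worker count"
    chunk = size // n
    data = [list(g) for g in grads]

    def sl(c):
        return slice(c * chunk, (c + 1) * chunk)

    # Phase 1, reduce-scatter: at step s, worker i sends chunk (i - s) mod n
    # to its right neighbour, which adds it to its own copy of that chunk.
    for s in range(n - 1):
        sends = [(i, (i - s) % n, data[i][sl((i - s) % n)]) for i in range(n)]
        for i, c, payload in sends:
            dst = (i + 1) % n
            data[dst][sl(c)] = [a + b for a, b in zip(data[dst][sl(c)], payload)]

    # Worker i now holds the fully reduced chunk (i + 1) mod n.
    # Phase 2, all-gather: circulate the reduced chunks around the ring.
    for s in range(n - 1):
        sends = [(i, (i + 1 - s) % n, data[i][sl((i + 1 - s) % n)]) for i in range(n)]
        for i, c, payload in sends:
            data[(i + 1) % n][sl(c)] = payload
    return data


def bytes_sent_per_worker(n_workers: int, gradient_bytes: float) -> float:
    """Traffic each worker sends in ring all-reduce: 2 * (n - 1) / n * size."""
    return 2 * (n_workers - 1) * gradient_bytes / n_workers


if __name__ == "__main__":
    result = ring_allreduce([[1, 2, 3], [10, 20, 30], [100, 200, 300]])
    print(result)
    print(bytes_sent_per_worker(1024, 1e9))
```

Every step of both phases requires all workers to exchange data at once, so a single congested or slow link delays the whole collective; this is why lossless, low-latency fabrics matter for distributed training.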

3. Silicon One G200/G202 - Competitive Advantage of AI-Optimized Networking Chips

Cisco’s Silicon One G200/G202 ASIC offers the following technical advantages with its AI workload-optimized design:

Key Performance Indicators

- Throughput: 51.2 Tbps (significantly improved over previous generations)

- Capable of configuring 32K 400G GPU clusters in a 2-tier network

- 40% reduction in switches, 50% reduction in optics, 33% reduction in network layers
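One plausible way to arrive at the 32K figure above is the standard capacity formula for a non-blocking two-tier leaf-spine (Clos) network. The sketch below assumes the 51.2 Tbps switch is used as 512 ports of 100G and that each 400G GPU connection is carried as four 100G links; both are assumptions made for illustration rather than details stated in this article.

```python
SWITCH_CAPACITY_GBPS = 51_200   # 51.2 Tbps, from the article


def switch_radix(capacity_gbps: int, port_gbps: int) -> int:
    """Number of ports a switch exposes at a given port speed."""
    return capacity_gbps // port_gbps


def two_tier_endpoints(radix: int) -> int:
    """Max endpoints in a non-blocking two-tier leaf-spine fabric.

    Each leaf uses half its ports for endpoints and half for uplinks,
    and each spine port connects one leaf, allowing up to `radix` leaves.
    """
    leaves = radix
    return leaves * (radix // 2)


def gpus_supported(capacity_gbps: int, link_gbps: int, gpu_gbps: int) -> int:
    """GPUs supported when each GPU port is split into gpu_gbps/link_gbps links."""
    links = two_tier_endpoints(switch_radix(capacity_gbps, link_gbps))
    return links // (gpu_gbps // link_gbps)


if __name__ == "__main__":
    print(switch_radix(SWITCH_CAPACITY_GBPS, 100))          # radix at 100G
    print(gpus_supported(SWITCH_CAPACITY_GBPS, 100, 400))   # 400G GPUs in two tiers
    print(gpus_supported(SWITCH_CAPACITY_GBPS, 400, 400))   # same switch used as 128 x 400G
```

Under these assumptions the high-radix configuration supports 32,768 GPUs in two tiers, while using the same silicon as 128 ports of 400G supports 8,192, which illustrates why radix, and not only raw throughput, determines how many network layers a cluster needs.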

AI/ML Task Optimization

- 1.57x faster Job Completion Time (utilizing Enhanced Ethernet)

- Ultra-low latency + high-performance load balancing: Minimizes synchronization time for AI distributed training tasks

- Optimized AllReduce communication: Eliminates bottlenecks in large-scale model training

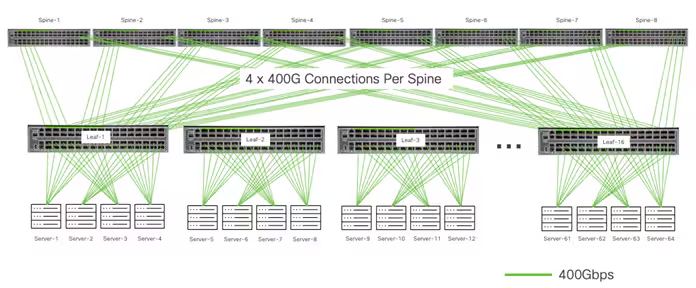

Cisco Backend Network Architecture

The architecture above demonstrates Cisco's principles for large-scale AI cluster design:

Hierarchical Structure

- Spine Layer: 8x 400Gbps port switches interconnect the entire cluster

- Leaf Layer: Each Leaf connects to the Spine with 4x 400G ports (eliminating oversubscription)

- Server Layer: GPU servers connect with 4x 400G ports

Advantages of this Structure

- Non-blocking Fabric: Enables full bandwidth communication between all servers

- Deterministic Performance: Ensures consistent network performance for all GPUs

- Linear Scalability: No performance degradation as cluster size increases

Such architectures are already being widely deployed by major webscalers like Meta, Google, and AWS, and supply shortages due to capacity constraints have become Cisco’s biggest “good problem.”
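The "eliminating oversubscription" property of the leaf layer described above comes down to one ratio: server-facing bandwidth divided by spine-facing bandwidth. A minimal sketch follows, using the 4x 400G figures from the hierarchy list; the 8-port counterexample is hypothetical.

```python
def oversubscription_ratio(down_ports: int, down_gbps: int,
                           up_ports: int, up_gbps: int) -> float:
    """Downlink bandwidth divided by uplink bandwidth for one leaf switch.

    A ratio of 1.0 means the leaf is non-blocking; values above 1.0 mean
    servers can offer more traffic than the uplinks can carry.
    """
    return (down_ports * down_gbps) / (up_ports * up_gbps)


if __name__ == "__main__":
    # Leaf from the article: 4 x 400G toward servers, 4 x 400G toward spines.
    print(oversubscription_ratio(4, 400, 4, 400))
    # Hypothetical contrast: 8 x 400G down with the same 4 x 400G up.
    print(oversubscription_ratio(8, 400, 4, 400))
```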

4. Strategic Partnership with NVIDIA: Secure AI Factory

In March 2025, Cisco and NVIDIA announced the Cisco Secure AI Factory with NVIDIA, which goes beyond simple technical collaboration to become a standard architecture for the enterprise AI market.

Key Areas of Collaboration

- NVIDIA Spectrum-X Ethernet Integration: Low-latency, high-speed connectivity between Cisco Nexus switches and NVIDIA GPUs

- Secure AI Factory Validated Reference Architecture: An enterprise AI infrastructure standard considering regulation and security

- Integrated Security: Cisco Hypershield + Cisco AI Defense to protect the entire AI workload stack

October 2025 Announcement - N9100 Data Center Switch

- First partner-developed switch based on NVIDIA Spectrum-X

- NVIDIA Cloud Partner compliant architecture for Neocloud and Sovereign Cloud deployments

This collaboration differentiates Cisco from other AI networking suppliers, including Broadcom and NVIDIA itself, by offering enterprise customers a balance of a trusted, closed security environment and open standards.

5. Direct Benefits from UALink and the Open AI Ecosystem

The emergence of UALink is accelerating the trend of 'open, high-performance connectivity' becoming a market standard between accelerators, storage, and networks within data centers. As a founding member of the UALink consortium, Cisco gains the following advantages:

Direct Benefits

- Expansion of the AI switch/router/interconnect market: Strengthens leadership in interoperability across multi-hardware environments

- Long-term support for multi-vendor, standards-based solutions as AI scale and complexity increase

- Lower entry barriers for the Sovereign Cloud market: Promotes the establishment of independent AI infrastructures in emerging regions such as the Middle East and Southeast Asia → Surging demand for Cisco network solutions

Indirect Benefits

- Webscale customers seeking supply diversification: Stronger incentive to adopt Cisco as they reduce their reliance on NVIDIA

- Lower infrastructure entry barriers for nascent AI startups and small-to-medium data center operators: Expanded scope of Cisco networking support

6. Middle East Sovereign AI Infrastructure - Geopolitical Growth Engine

Cisco is proactively securing the Middle East Sovereign Cloud market in parallel with the opportunity created by OpenAI’s investments.

Key Projects

Saudi Arabia - HUMAIN Partnership (May 2025)

- Establishment of Sovereign Cloud data centers in Riyadh and Jeddah

- Silicon One-based AI Fabric: Non-blocking architecture supporting 1PB/s bandwidth

- National Data Protection: Enables training on domestic datasets without data transfer abroad

- Alignment with Saudi Vision 2030: Supports the goal of nurturing 500,000 AI talents

UAE - G42 Partnership (October 2025)

- Construction of large-scale AI clusters: Based on AMD’s MI350X GPUs

- Integration of Regulated Technology Environment (RTE): Ensures compliance, security, and transparency

- Acceleration of US-UAE AI Cooperation: Strengthens Western-aligned AI infrastructure standards geopolitically

7. Advanced Software/Subscription-Based Business Model - Sustainable Growth

Cisco is continuously transitioning from a mere hardware company to a software platform company.

Splunk Acquisition (2024, $28B)

- Acquisition of a real-time data analytics platform

- Provides visibility and optimization solutions for AI infrastructure operational data

- Strengthens AI security threat detection and response

Software and Subscription Revenue Share

- Over 54% of total revenue is recurring revenue

- Reduced reliance on hardware cycles → Transition to a predictable growth model

This structure offsets the volatility of AI hardware cycles and signifies a transition to a high-margin business based on long-term customer relationships.

Specific Impact of OpenAI Investment: Network Infrastructure Demand Estimation

Networking Cost Structure Due to Large-Scale Data Center Construction

Of OpenAI’s $1.4 trillion infrastructure investment, the share going to each data center component, including networking equipment, is generally as follows:

Data Center Component Cost Distribution

- Computing (GPU/CPU): 50-60% ($700-$840B)

- Power and Cooling Infrastructure: 15-20% ($210-$280B)

- Networking and Storage: 15-20% ($210-$280B)

- Facilities and Other: 5-10% ($70-$140B)

- Switching/Routing: Centered around Cisco, Arista, Broadcom

- Optical Modules: Centered around Cisco, NVIDIA, Broadcom

- Current Cisco market share: Estimated at 30-35% (based on existing relationships)

In other words, OpenAI's project alone could generate potential revenue opportunities of $60-$100 billion for Cisco. This is equivalent to 1-2 times Cisco’s current annual revenue.
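The $60-$100 billion estimate above follows from multiplying three quoted ranges. The sketch below reproduces that arithmetic; it inherits the article's assumptions, including that the 15-20% bucket covers both networking and storage, so the result is best read as an upper-bound opportunity rather than a forecast.

```python
TOTAL_INVESTMENT_BUSD = 1400              # $1.4 trillion, in billions
NETWORKING_SHARE = (0.15, 0.20)           # networking and storage share
CISCO_MARKET_SHARE = (0.30, 0.35)         # estimated share quoted in the article


def opportunity_range(total_busd: float, segment_share, vendor_share):
    """Return (low, high) vendor opportunity in billions of USD."""
    low = total_busd * segment_share[0] * vendor_share[0]
    high = total_busd * segment_share[1] * vendor_share[1]
    return round(low, 1), round(high, 1)


if __name__ == "__main__":
    low, high = opportunity_range(TOTAL_INVESTMENT_BUSD,
                                  NETWORKING_SHARE, CISCO_MARKET_SHARE)
    print(f"Implied Cisco opportunity: ${low}B to ${high}B")
```

The product works out to $63-$98 billion, which the article rounds to $60-$100 billion.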

AI Infrastructure Investment Competition Among Large Cloud Providers

OpenAI’s moves have accelerated AI infrastructure investments by existing cloud companies like Microsoft, AWS, and Google:

- Microsoft: Accelerating its own data center expansion through realignment with OpenAI

- AWS: Expanding AI infrastructure based on its own chips (Trainium, Inferentia)

- Google: Pursuing large-scale expansion of TPU clusters

Total estimated capital expenditure (CapEx) for these three companies in 2025: approximately $80-$90 billion (+50-60% year-over-year)

This could increase the overall data center networking infrastructure market size by 50-70% annually, providing structural tailwinds for suppliers like Cisco.

Growth Scenarios and Stock Price Potential

Scenario 1: Conservative Scenario - Maintain Current Level + 10% Market Share Expansion

Assumptions

- FY 2026-2028 AI infrastructure revenue grows at an average annual rate of 40%

- Overall revenue growth rate of 5-7%

- Operating profit margin maintained at 22-25%

Results

- 2028 Revenue: $68-$72B

- 2028 EPS: $2.95-$3.20

- Target Stock Price (30x P/E): $88.50-$96

Scenario 2: Base Scenario - UALink Standard Proliferation + Success in Middle East Sovereign Market

Assumptions

- FY 2026-2028 AI infrastructure revenue grows at an average annual rate of 60%

- Overall revenue growth rate of 8-10%

- Operating profit margin reaches 23-26% (Splunk integration complete)

- Market share of 40%+ secured in the Middle East Sovereign Cloud market

Results

- 2028 Revenue: $80-$88B (+40-55%)

- 2028 EPS: $3.60-$4.15

- Target Stock Price (35x P/E): $126-$145

Scenario 3: Aggressive Scenario - Deepened Collaboration with OpenAI and Others + UALink Market Dominance

Assumptions

- FY 2026-2028 AI infrastructure revenue grows at an average annual rate of 100%

- Overall revenue growth rate of 12-15%

- Operating profit margin of 25-28%

- Cisco establishes itself as an “essential infrastructure partner” for major projects like OpenAI and G42

- AI infrastructure revenue accounts for 15-20% of total revenue

Results

- 2028 Revenue: $95-$105B (+70-85%)

- 2028 EPS: $4.60-$5.40

- Target Stock Price (40x P/E): $184-$216
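Each target price in the three scenarios is the projected 2028 EPS multiplied by the assumed price-to-earnings multiple. The sketch below reproduces that calculation from the figures in the scenarios; the EPS ranges and multiples are the article's assumptions, not independent estimates.

```python
SCENARIOS = {
    "Conservative": {"eps": (2.95, 3.20), "pe": 30},
    "Base":         {"eps": (3.60, 4.15), "pe": 35},
    "Aggressive":   {"eps": (4.60, 5.40), "pe": 40},
}


def target_price_range(eps_range, pe_multiple: float):
    """Target price range = EPS range multiplied by the P/E multiple."""
    low, high = eps_range
    return round(low * pe_multiple, 2), round(high * pe_multiple, 2)


if __name__ == "__main__":
    for name, s in SCENARIOS.items():
        low, high = target_price_range(s["eps"], s["pe"])
        print(f"{name}: ${low} to ${high}")
```

The results match the stated targets, with the upper end of the base case ($145.25) rounded to $145. Because the multiple rises from 30x to 40x across scenarios, the targets are sensitive to valuation assumptions as well as to earnings.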

Risk Factors and Considerations

1. Increased Competition

- Broadcom, NVIDIA: Existing competitors are strengthening their positions as the AI networking market shifts

- New Entrants: Marvell, Juniper, etc., developing AI-specific chips

2. Delays in Technology Standardization

- UALink Adoption Speed: May not proceed as currently planned

- Coexistence with NVIDIA Spectrum-X: Customers face a dilemma in choosing between InfiniBand-centric and Ethernet-based fabrics

3. Supply Chain Constraints

- Silicon One Chip Shortage: TSMC capacity constraints could limit Cisco’s revenue growth

- Optical Module, Port Supply: Potential supply bottlenecks due to surging demand

4. Geopolitical Risks

- Middle East Project Political Risks: Possible delays in Sovereign Cloud investments if the regional political situation worsens

- US-China Technology Regulations: Reduced potential market due to exclusion from the Chinese market

Conclusion: Assessment of Cisco’s AI Infrastructure Growth Potential

Cisco is not merely a beneficiary of the AI infrastructure boom but a builder of the next-generation AI data center standard.

Key Success Factors

- Technological Leadership: Silicon One’s performance + RoCEv2-based GPU direct communication optimization + integration of security/openness through NVIDIA collaboration

- Market Timing: Synchronization of UALink standard proliferation and OpenAI’s large-scale investments

- Geopolitical Advantage: Proactive securing of the Middle East Sovereign Cloud market

- Business Model Evolution: Strengthening profitability through transition to a software/subscription-based model

2028 Target Stock Price

- Conservative: $88-$96

- Base: $126-$145

- Aggressive: $184-$216

Estimated annualized return over three years: 10-25% relative to the current Cisco stock price.
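An annualized return is a compound growth rate between the current price and the target price. The article does not state the current price it uses, so the sketch below takes it as an input; the $100 figure in the example is purely hypothetical.

```python
def annualized_return(current_price: float, target_price: float,
                      years: float) -> float:
    """Compound annual growth rate from current_price to target_price."""
    return (target_price / current_price) ** (1 / years) - 1


def implied_current_price(target_price: float, annual_return: float,
                          years: float) -> float:
    """Starting price that yields target_price at the given annual return."""
    return target_price / (1 + annual_return) ** years


if __name__ == "__main__":
    # Hypothetical example: a $100 stock reaching $133.10 in three years.
    print(f"{annualized_return(100.0, 133.10, 3):.1%}")
    print(f"{implied_current_price(133.10, 0.10, 3):.2f}")
```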

Conclusion: Cisco deserves attention as a sustained beneficiary of the AI infrastructure boom from a different angle than AMD and NVIDIA, and is a balanced choice for investors seeking AI infrastructure exposure in a mid-to-long-term (3-5 years) portfolio. The standardization of RoCEv2 technology and the growth of the Sovereign Cloud market are expected to further strengthen Cisco’s long-term competitiveness.

Disclaimer: This article is for general informational purposes only and does not constitute investment advice. All investment decisions are the sole responsibility of the investor, and the author assumes no direct or indirect liability for any losses incurred as a result.

References

- “OpenAI Wants to Get to $1 Trillion a Year in Infrastructure Spend” - Axios (October 28, 2025)

https://www.axios.com/2025/10/28/openai-1-trillion-altman

- “Cisco Reports Fourth Quarter and Fiscal Year 2025 Earnings” - Cisco Investor Relations (August 13, 2025)

https://investor.cisco.com/news/news-details/2025/CISCO-REPORTS-FOURTH-QUARTER-AND-FISCAL-YEAR-2025-EARNINGS/

- “Cisco Q4 FY 2025 Delivers Solid Growth With AI and Networking Tailwinds” - Futurum Group (August 15, 2025)

https://futurumgroup.com/insights/cisco-q4-fy-2025-delivers-solid-growth-with-ai-and-networking-tailwinds/

- “Cisco and G42 Deepen US-UAE Technology Partnership to Build Secure End-to-End AI Infrastructure in the UAE” - PR Newswire (October 28, 2025)

https://www.prnewswire.com/news-releases/cisco-and-g42-deepen-us-uae-technology-partnership-to-build-secure-end-to-end-ai-infrastructure-in-the-uae-302596515.html

- “UALink Consortium Reshapes AI Infrastructure Economics with Open Standard Initiative” - Insights from Analytics (June 4, 2025)

https://www.insightsfromanalytics.com/post/ualink-consortium-reshapes-ai-infrastructure-economics-with-open-standard-initiative